Analytics for Learn

Analytics for Learn is an optional service that lets you run reports that track how the students in your courses are performing. Analytics for Learn extracts data from Blackboard, transforms it, and brings it into an analytics framework where it's combined with data from your institution's student information system (SIS).

Important

To access these reports, your institution must have licensed, installed, and activated Analytics for Learn.

Analytics for Learn reports

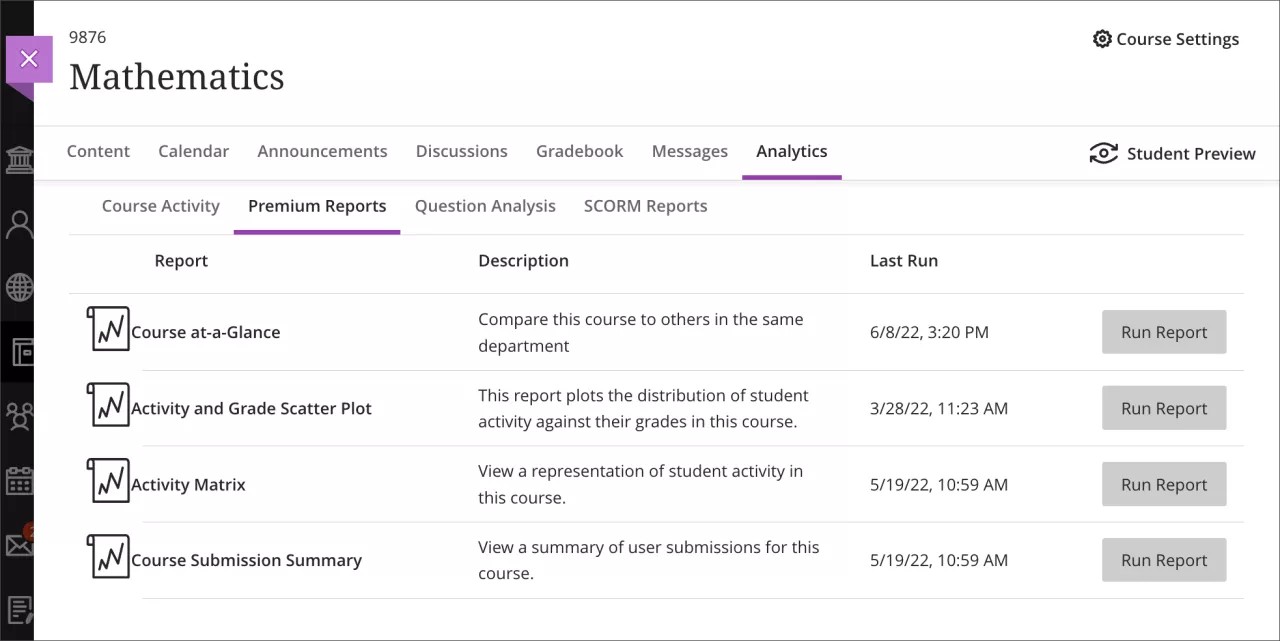

Analytics for Learn provides these reports:

Course at-a-Glance: Interactions, submissions, and time in course compared to similar courses in your department.

Activity and Grade Scatter Plot: A scatter plot of student activity in your course against grades in the course.

Activity Matrix: This report compares each student's number of submissions to the average across all students in that course. The report includes a graph of submission trends over the entire term, number of submissions, the average number of submissions in the course, days since the student's last submission, and last submission type.

Course Submission Summary: Submission information for each student, including assignments, tests, and surveys, as well as graded discussions, blogs, journals, and wikis.
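To make the Activity Matrix comparison concrete, here is a minimal sketch in Python of the kind of calculation the description implies: each student's submission count set against the course average, plus the days since the last submission and its type. The sample data, the field names, and the `activity_matrix` function are hypothetical and for illustration only. This is not how Analytics for Learn computes the report.

```python
from datetime import date
from statistics import mean

# Hypothetical sample data: (student, submission date, submission type).
submissions = [
    ("ana", date(2024, 3, 1), "assignment"),
    ("ana", date(2024, 3, 8), "test"),
    ("ana", date(2024, 3, 15), "discussion"),
    ("ben", date(2024, 3, 2), "assignment"),
    ("cy", date(2024, 3, 4), "assignment"),
    ("cy", date(2024, 3, 12), "journal"),
]


def activity_matrix(rows, today):
    """Summarize each student's submissions against the course average.

    Only students with at least one submission appear in the result.
    """
    per_student = {}
    for student, when, kind in rows:
        per_student.setdefault(student, []).append((when, kind))

    # Average number of submissions across the students seen in the data.
    course_average = mean(len(items) for items in per_student.values())

    report = {}
    for student, items in per_student.items():
        # Most recent submission, chosen by date.
        last_date, last_type = max(items, key=lambda item: item[0])
        report[student] = {
            "submissions": len(items),
            "course_average": course_average,
            "difference": len(items) - course_average,
            "days_since_last": (today - last_date).days,
            "last_type": last_type,
        }
    return report


report = activity_matrix(submissions, today=date(2024, 3, 20))
for student, row in report.items():
    print(student, row)
```

A negative `difference` marks a student who has submitted less than the course average, which is the kind of signal the report is meant to surface.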

Access Analytics for Learn reports

Select Analytics on the navigation bar. On the Course Analytics page, select Reports. The Reports page lists the reports you can run, along with each report's description and the time it was last run. Select Run Report to generate a report. Analytics for Learn reports open in a separate tab.

If you have Predict and Analytics for Learn, you'll see options for Reports and Student Risk Reports on the Course Analytics page. Select Reports to see the Analytics for Learn options.

You can export the list of students from the reporting system and import the list into an application such as Microsoft® Excel® for further analysis.

Question Analysis tab

Question analysis provides statistics on overall performance, assessment quality, and individual questions. This data helps you recognize questions that might be poor discriminators of student performance. Question analysis is for assessments with questions. You can run a report before all submissions are in if you want to check the quality of your questions and make changes.
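To show what a "poor discriminator" means, here is a minimal sketch in Python that scores each question by how strongly success on it tracks overall performance. It uses the Pearson correlation between a question's scores and students' total scores, which is one common choice. That choice, the `discrimination` function, and the sample scores are assumptions for illustration, not a description of how Question Analysis calculates its statistics.

```python
from statistics import mean, pstdev


def discrimination(item_scores, total_scores):
    """Pearson correlation between one question's scores and total scores.

    Returns None when either list has no variation, because the
    correlation is undefined in that case.
    """
    spread_item = pstdev(item_scores)
    spread_total = pstdev(total_scores)
    if spread_item == 0 or spread_total == 0:
        return None
    mean_item = mean(item_scores)
    mean_total = mean(total_scores)
    covariance = mean(
        (x - mean_item) * (y - mean_total)
        for x, y in zip(item_scores, total_scores)
    )
    return covariance / (spread_item * spread_total)


# Hypothetical data: six students' total scores and their results on
# three questions (1 = correct, 0 = incorrect).
totals = [9, 8, 7, 4, 3, 2]
q1 = [1, 1, 1, 0, 0, 0]  # answered correctly only by high scorers
q2 = [0, 1, 0, 1, 0, 1]  # correctness is unrelated to overall score
q3 = [1, 1, 1, 1, 1, 1]  # everyone answered correctly

for name, scores in (("q1", q1), ("q2", q2), ("q3", q3)):
    print(name, discrimination(scores, totals))
```

In this sketch, a value close to 1 suggests that the question separates stronger from weaker students, a value near zero or below suggests a question worth reviewing, and `None` means the question cannot be evaluated because every student scored the same on it.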

Use of logs and user access data for decisions regarding academic integrity

Anthology doesn’t recommend using access and activity logs as the only means of making decisions about students’ academic integrity. Analyzing individuals or small data samples for high-stakes decisions such as determining cheating is technically possible, but these types of analyses are often influenced by common data biases, in particular confirmation bias and equating correlation with causation. Here are two examples of where data analysis biases could lead to improper conclusions:

A student’s IP address changing as a test begins could indicate cheating by having someone else take the test; it can also indicate that the student needed to restart their router or that they’re using a VPN on a public network to better secure their computer.

Several students starting a test at the same time could indicate cheating by coordinating to take it as a group; it can also indicate these students simply have similar work and personal schedules, leading them to do their course work at very similar times.

For these reasons, features such as the Access Logs in Tests or other point-in-time analysis of access and activity logs are not recommended as the only means of determining academic integrity, though they might reinforce other findings in an investigation of misconduct. Access and activity logs are primarily designed for aggregated analysis and for troubleshooting system and user issues. Some reports are constructed from system log files rather than transactional database tables. Although it is rare, these report types can be more susceptible to lost or duplicated data, so results for very small samples, such as one individual at a single point in time, can be inaccurate.

If you have concerns about cheating or academic integrity, we recommend that you start with the institutional office responsible for handling such incidents of misconduct. This might be an office such as academic affairs or academic technology. Such offices generally have policies and approved procedures for conducting investigations, whether the activity in question occurred online or not. Using a single log or a small activity data set alone to identify misconduct is susceptible to several types of data bias, so Anthology doesn’t recommend relying on such data alone to conclude that misconduct occurred.